
Niccolò Bianchini,

Lorenzo Ancona


Niccolò Bianchini

Lorenzo Ancona

Founder and editor of the newsletter Artifacts

To date, there is no universally accepted definition of artificial intelligence (AI). However, several definitions do capture its fundamental aspects, such as the one recently updated by the OECD from an earlier 2019 version, which will probably be incorporated into the AI Act now under discussion in the European Union. It states: “An AI system is a machine-based system that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments. Different AI systems vary in their levels of autonomy and adaptiveness after deployment.” This draws a broad perimeter, useful for encompassing the largest possible number of existing AI technologies; and although it does not consider the human component, it clarifies how AI technologies operate as statistical inference systems that process inputs to generate different types of output.

But this definition ignores other essential elements of artificial intelligence. To put it bluntly, if provocatively, AI is neither intelligent nor artificial. As Kate Crawford, co-founder of New York University’s AI Now Institute, argues, artificial intelligence is not a synthetic product but “comprises natural resources, fuels, human labour, data, infrastructure and classifications”, which shows how central economic, political and technical dynamics are to it. As far as intelligence is concerned, Luciano Floridi, founding director of Yale University’s Digital Ethics Center, speaks of “artefacts with the ability to act without being intelligent”, i.e. able to carry out tasks without any autonomous consciousness, solely thanks to computing power and statistical inference. These distinctions are not mere philosophical flights of fancy, but the premises of an informed reflection on the subject.

While the general public discovered AI with ChatGPT a year ago, it is worth pointing out that generative AI, the technology on which ChatGPT is based, is just one of several types of AI, and that various AI applications were already part of our daily lives. These include the recommendation algorithms used by social networks, predictive analytics in finance, and programmes for diagnosis and personalised therapy in medicine.

With these clarifications in mind, some of the considerations raised so far can be assessed. The transformative nature of this technology is clear: AI has the potential to revolutionise different areas of human experience and, more profoundly, to change reality and the very role of human beings within it[1]. Among its various fields of application, the world of work is under particular scrutiny, with several analyses forecasting major changes for businesses and a significant impact on productivity.

The challenge of AI has recently been taken up by the international community, notably at the regulatory level. Great efforts are being made to ensure future-proof legislation, given the extremely rapid evolution of AI technologies, in particular the rise of foundation models. Although a number of recent initiatives, such as the Executive Order issued by US President Biden, the AI Safety Summit at Bletchley Park, the G7’s Code of Conduct and the Global AI Governance Initiative launched by China, reflect growing awareness of this issue, the European Union is proving to be a forerunner in regulating this technology with the AI Act. As Anu Bradford, the scholar who coined the term “Brussels Effect”, puts it: “it is reasonable to expect that the European regulatory role will continue. What's more, the European Union is influencing other players in this field: the United States is steadily moving towards the European model, abandoning one that is purely libertarian”[2].

Beyond this, the industrial development of AI technologies has been underway for some time, and is shaping up to be a new arena of fierce confrontation between global players. Indeed, the market-led US model, the state-led Chinese model and the rights-led European model are likely to clash not only in a global regulatory contest, but also in terms of technological development.

The European vision and approach

The European Union took up the opportunity and challenge of artificial intelligence in 2018, when the Commission published its communication “Artificial Intelligence for Europe”. A number of initiatives followed, including the creation of the “High-Level Expert Group on AI”, the publication of the “White Paper on AI” and, above all, the proposal for an AI Act in April 2021, which is currently being negotiated in trilogues, where it faces divergent positions.

The European approach is clear, at least in its intentions: to strengthen AI research and industrial capacity while guaranteeing fundamental rights. The guiding principles are just as clear: the technological sovereignty of the European Union, for the sake of strategic autonomy, and the centrality of people in the digital transformation. These two areas, innovation and development on the one hand and the protection of rights on the other, are not separate but interconnected: the European Union will only be able to assert its rules and values for AI in the digital age through its own solid and competitive ecosystem of excellence.

Despite the ambition of this approach, it must be acknowledged that the European Union is currently, at best, a secondary player in the development of AI. This is hardly a surprise: it reflects the chronically slow progress of Europe's innovation sector. Lack of investment, an incomplete single market, a limited ability to attract and retain talent, a shortage of data and a regulatory jungle that is not always easy to navigate are just some of the obstacles preventing Europe from becoming competitive and innovative: in short, a true global technological powerhouse.

AI is therefore not simply a new challenge to address, but a valuable opportunity to be turned into an advantage for the European innovation ecosystem as a whole. As Anu Bradford argues, “AI should be used as a wake-up call for the European Union. If it is not to be left behind, we must ensure that the European Union can innovate”. Faced with the technological revolution brought about by AI, regulation alone is not enough: innovation is necessary. After all, creating wealth is preferable to regulating, and innovating is more satisfying than copying. For this reason, the European Union is committed to strengthening its position as an AI hub by focusing on industrial development, the promotion of specialised companies and the effective large-scale adoption of these technologies. This last point is essential: in the European Union, only 11% of non-specialist companies use AI technologies, and projections indicate that by 2030 this figure will reach only 20%, far short of the 75% target.

Given this urgency, the following sections set out some key challenges that need to be addressed if Europe is to be at the forefront of AI, and some concrete measures that could break the current impasse. The objective the European Union must pursue is simple and urgent: to become a world leader in both technological and industrial excellence and regulatory efficiency, or risk falling further behind.

Financing European AI

The scarcity of venture capital and weak stock markets are among the main causes of the AI industry's slow progress, preventing the development of a dynamic innovation sector for AI start-ups. The picture is even more alarming when compared with other global players: over the period 2012-2020, venture-capital investment in the USA was ten times that in the euro area, while the European Union accounts for less than 10% of global equity investment in AI, compared with a combined 80% for China and the United States, a gap that will probably continue to widen. Moreover, as Figure 1 shows, the balance of power is stark: only three European countries (Germany, France and Spain) are among the fifteen leading countries in terms of investment in AI, and US private investment is thirty-five times greater than that of Germany ($249 billion compared with $7 billion), the largest investor in Europe.

There are two reasons for this lack of investment. Firstly, the European banking model, based on risk assessment, imposes very strict rules that limit risky investments such as those in start-ups. Large institutional investors, who have greater financial firepower, account for only 14% of the European venture-capital market, whereas in the United States they represent 35%, largely thanks to pension funds and university endowments. Secondly, although private investment is increasing in the early financing stages, European companies cannot compete in the later acquisition stages, and successful start-ups are systematically bought up by American companies. In this sense, the absence of European AI technology companies of comparable size is one of the reasons why investment is stalling. This second dynamic has a number of implications, such as the exodus of talent, the loss of intellectual property and excessive dependence on American investors, all of which hinder the path towards European sovereignty.

Fig. 1: Stanford HAI, Artificial Intelligence Index Report 2023, page 190

It is true that the European Union, pursuing its dual approach of regulation and innovation, has developed a range of innovative instruments and planned several investments in the AI sector, which are growing steadily. Through programmes such as ‘VentureEU’ and Horizon Europe (formerly Horizon 2020), the European Union is on the verge of reaching its target of €20 billion a year in public and private investment in AI. However, the lack of coordination in the management of these funds and the weak synergies between Member States, and between their respective ecosystems, are hampering their efficient use. For example, contrary to the provisions of the ‘Coordinated Plan on Artificial Intelligence’, annual monitoring of investment by Member State remains inadequate. This fragmentation weakens the European investment strategy: so far, the Union has proved more effective at legislating, with the AI Act, than at investing.

Simplifying and streamlining the rules and coordinating investment are therefore the main policy objectives. In detail, the rules of the European financial framework need to be revised to facilitate investment in start-ups, including more flexible regulations for institutional investors. In addition, the European Union should move towards greater market integration and free movement of capital, along the lines of the ‘Capital Markets Union’ (CMU). This would create a more efficient and genuinely European financial sector, no longer confined within national borders. Furthermore, the European Investment Bank (EIB) could promote diversified investment schemes that bring together institutional and private players as well as national banks, with the aim of greater integration and availability of resources. Finally, the role of the Member States should not be underestimated: they could consider pooling resources to finance the development of AI, even outside the European budget if necessary.

Building an ecosystem of excellence: a Union tailored to AI

The absence of an integrated innovation ecosystem constitutes a second major barrier to the establishment of the European Union as a dynamic centre of innovation and has serious implications for the emerging AI sector. Indeed, this not only limits the development and expansion of European excellence, but also threatens the role of the European Union in global competition. It is therefore a priority to create favourable conditions for the establishment of an ecosystem of excellence for Artificial Intelligence.

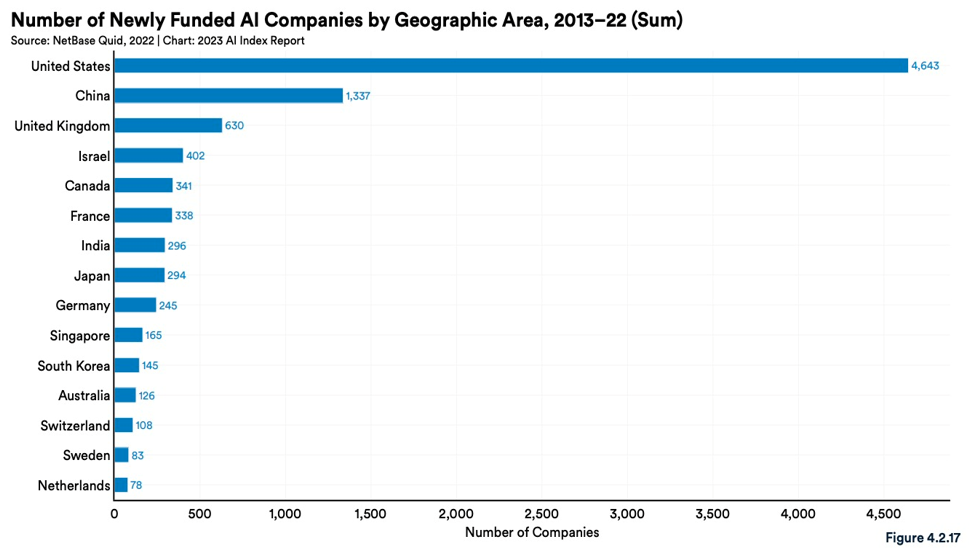

Economies of scale achieved through the concentration, integration and proximity of talent are the cornerstone of any successful innovation ecosystem. It is therefore imperative for the European Union to foster the integration of talent and capital, the development of a dynamic labour market and the promotion of European centres of excellence, so as to give fresh impetus to an ecosystem conducive to the birth and growth of AI start-ups and companies. The need is particularly clear when we compare the forces at play: only three European companies (Accenture, SAP and ASML) feature in the top twenty of the Forbes index. Furthermore, as Figure 2 shows, when it comes to the creation of new AI companies, the dominant position of the United States and, to a lesser extent, China is not being challenged by the European Union, and even less by its individual Member States. Lagging data availability for AI development, the poor circulation of talent and the anaemic funding of AI projects are among the most pertinent criticisms.

Fig. 2: Stanford HAI, Artificial Intelligence Index Report 2023, page 194

The main problems are the geographical fragmentation of innovation and the still incomplete European digital market. There is no shortage of AI innovation and development centres in Europe, but they are concentrated in a few regions (Île-de-France, Oberbayern and Noord-Brabant), as reflected in the uneven distribution of AI licences and investment. Because the digital market is poorly integrated, these companies have to navigate the regulatory complexities of twenty-seven Member States and struggle to establish themselves on a European scale. The result is a slower internationalisation process for these national champions, with Member States often competing against each other, to the detriment of the continent's competitiveness.

Echoing recent remarks by Mario Draghi, who has been commissioned to draw up a report on European competitiveness, a deeper Union is needed, perhaps above all for AI. Firstly, as Anu Bradford notes: "We need to open up the digital single market. This is not a different challenge from the digital economy as a whole, but the economic stakes and risks associated with AI highlight the need to create a digital single market." The first step would be to complete the digital single market, without which it is difficult to imagine a Union that is competitive in AI on the world stage. However, this must be followed by concrete measures that foster a dynamic and integrated AI and innovation ecosystem. In this respect, the priority must be to strengthen the interconnections between existing innovation clusters and to create new ones, so as to promote synergies and generate economies of scale. The process for EU start-ups to expand beyond their country of origin must also be simplified, since it is currently excessively complex and dependent on national legislation. To this end, it would be useful to create a European innovation passport, defining the status of a European innovative company subject to a single tax regime, uniform administrative procedures and coordinated labour policies aimed at retaining talent. It is a thought-provoking and admittedly ambitious idea, but a necessary one if we are to pursue a strong objective: the development of a European AI ecosystem.

One promising initiative is Kyutai (‘sphere’ in Japanese). This $300 million laboratory, created by Xavier Niel, founder of Free, Rodolphe Saadé, CEO of the shipping group CMA CGM, and Eric Schmidt, former head of Google, aims to become the heart of a French and European Silicon Valley. It resembles OpenAI in its original form: a non-profit research laboratory designed to build and experiment with large language models, with the idea of being "open" and accessible to anyone, including those wishing to use its work for commercial purposes.

This initiative is an important step towards empowering European start-ups. It offers them an alternative to dependence on, or acquisition by, non-European technology giants by providing the infrastructure they need to develop and grow independently.

Feeding AI: European data sovereignty

Data are often described as the new oil. Although this metaphor is increasingly contested, data are nevertheless the real fuel of AI, both for research and development and for its large-scale adoption by non-specialist users and companies. For this reason, Europe's considerable lag in data availability and access is an urgent issue to resolve if it is to guarantee strategic autonomy and technological sovereignty in AI.

The reasons for this are linked to the digital industry ecosystem, in which Europe lacks its own champions. Firstly, as the ‘European Data Strategy’ explains, a few non-European ‘Big Tech’ companies hold the majority of the world's data, while European SMEs lack internal databases and have limited access to external ones. Moreover, the fragmentation of the European digital market is impeding the creation of common datasets, notably due to the lack of collaboration and sharing between private businesses, public institutions and other stakeholders. This fragmentation also explains the gap with the United States and China, which can respectively rely on two opposing but equally centripetal forces for building vast datasets: the private sector and central institutions.

This has a significant impact on the entire AI value chain. While the lack of data weakens technology R&D, non-specialist companies also cite the lack of external databases and the poor availability of internal datasets as factors slowing down their adoption of AI technologies.

Effective policies must therefore address both the upstream and downstream dimensions of the AI industry, with the common goal of developing a solid European data infrastructure that is available to start-ups and facilitates adoption by SMEs. To this end, building on existing regulations such as the "Data Act" and the "Data Governance Act", care must be taken in their implementation to create Common European Data Spaces. In particular, it would be useful to encourage data-sharing practices, both business-to-business (B2B), given that the private sector holds most data and exploits it for its own development, and business-to-government (B2G). Moreover, moving forward with the Interoperable Europe Act, on which the European Parliament and the Council reached agreement on 13 November 2023, and guaranteeing its effective implementation will be decisive in reducing fragmentation and the resulting dispersion of data. This would lead, for example, to the interconnection of the Member States' digital public administrations, today a sort of Penrose staircase for citizens who decide to live in another Member State, and would represent a fundamental step forward for mobile European citizens and therefore for the continent’s competitiveness. Furthermore, speeding up the creation of sectoral data spaces for the development of AI models in specific sectors, such as energy and health, would provide European citizens with better-quality services. Finally, to encourage the adoption of AI by SMEs, it is a priority to support their investment in digitisation for internal data collection, along with tax deductions for innovative technologies and for hiring specialised staff, so that they are ready to adopt AI and fully exploit its potential.

Skills for AI: European expertise

When it comes to the European Union's competitiveness, it is impossible to ignore the role played by talent. Although AI is "artificial", it requires the availability of natural intelligence, i.e. skilled human capital, both for R&D activities and for the large-scale adoption of technologies.

The European Union does not suffer from a low output of talent, but from an inability to retain it. Rather than training alone, the urgent priority is therefore to make Europe's AI sector more attractive, so that it can attract and retain talent.

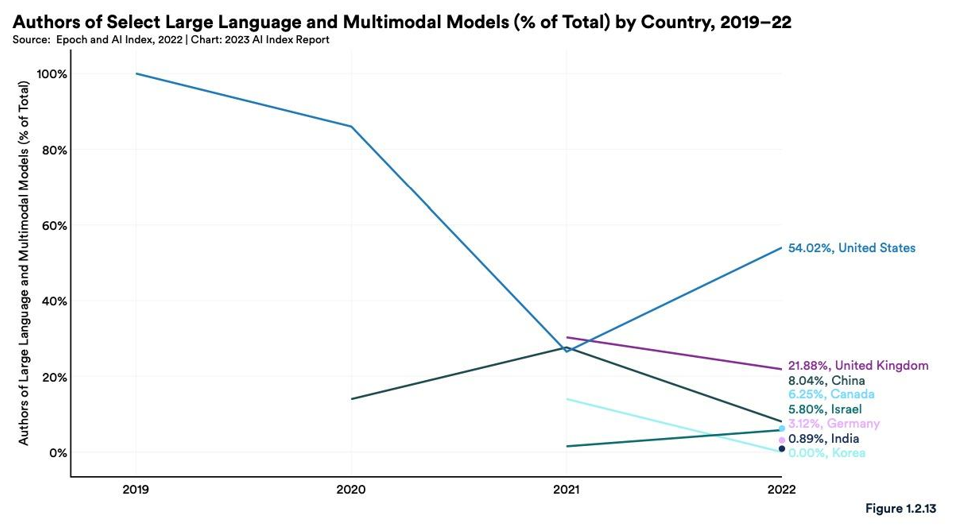

Regarding R&D, European academic research into AI is being severely tested by the increasing migration of human capital, particularly to the United States, where researchers are offered more attractive salaries, more flexible contracts and more prestigious academic and entrepreneurial positions. To give some idea of the scale of this brain drain: one third of the AI talent at American universities comes from the EU. As for the transfer from academia to business, the rate at which academic excellence is converted into commercial and entrepreneurial opportunities is particularly low in Europe. To date, as Figure 3 shows, European researchers have not yet made a significant contribution to large language models (LLMs)[3]: in 2022, 54% of the creators of AI models were American, while only 3% came from Germany, the leading European country. Two other telling data points: 12% of company founders in Europe have a PhD, compared with 30% in the United States, and only a third of Europe's top AI talent is actively involved in industry. It is therefore not surprising that European companies cite the shortage of talent on the labour market as one of the main obstacles to adopting AI.

Fig. 3: Stanford HAI, Artificial Intelligence Index Report 2023, page 58

The European Union's ambition must be to build an AI R&D hub capable of retaining European talent and attracting talent trained in other countries. To achieve this, synergies between academia and the private sector need to be strengthened in order to channel investment into research, ensure better coordination of resources and promote the creation of European centres of excellence. R&D partnerships between universities and private players in specific areas of AI application would increase the impact of academic publications and make more talent available on the labour market, benefiting both the emergence of European excellence in AI and the adoption of these technologies by non-specialist companies. In addition to training tomorrow's talent, it is also essential to invest in existing human capital: professional retraining is a priority for employees already on the labour market. In this way, non-specialist companies will be able to rely on their own workforce to adopt AI technologies and remain competitive in an ever-changing working environment. The ambition of these measures is justified by the urgency of the challenge: to make the European Union a centre of excellence in AI so that it can catch up in terms of productivity and make rapid progress towards technological sovereignty.

Regulating AI: the way forward to stimulate innovation

As Brando Benifei, the European Parliament's co-rapporteur on the AI Act, explains, in April 2021 "the European Union designed the AI law as a future-proof regulation. Thanks to the risk-based approach, which takes into account the possible emerging critical aspects of AI and not the technology itself, we have a regulatory framework that also applies to innovations that we do not see today, but that will appear in the future". Specifically, the Act classifies AI applications into four levels of risk (unacceptable, high, limited and minimal) and adapts regulatory obligations accordingly. The guiding principle is clear: not to hinder the development of new AI systems, while protecting the fundamental rights at stake in the use of these technologies.

The problem is that innovation moves faster than regulation, and there is a risk of spending time and resources in vain. This was highlighted by the advent of foundation models such as GPT-4, which were not provided for in the Commission's text and which forced the European Union, even before the text was approved, to consider an exception to the risk-based approach and to reconcile the different positions on the issue. Indeed, given that these "algorithms are trained on a wide variety of data and are capable of automating a wide variety of discrete tasks", it is impossible to assess their risks and, consequently, to apply the model envisaged by the European Union. Hence, to ensure that the AI legislation is not crippled from the outset or, worse still, that regulatory overkill does not stifle innovation, flexibility must be the watchword. This would mean adopting a regulatory approach for these models based on their practical applications rather than on risk. In particular, differentiating foundation models according to their concrete applications would allow requirements and obligations to be tailored accordingly, along the lines of the Digital Services Act (DSA), which imposes specific obligations on Very Large Online Platforms (VLOPs) and Very Large Online Search Engines (VLOSEs). In pragmatic terms, registration in public databases would make it possible to measure the extent to which these models are used. For now, Brando Benifei stresses that "this would be a limited exception to the risk-based approach of the AI Act and it will always be a priority to ensure governance and sanctioning mechanisms in line with the rest of the regulations".

Flexibility must be the linchpin of the implementation of the AI regulation. Firstly, it must ensure that the legislation does not crystallise the market in favour of the richest and most established companies, which are mostly non-European. It must also encourage the entry of emerging companies, which would otherwise be hampered by compliance costs, and ensure that the allocation of responsibility along the AI value chain is clear. Providers, such as the European start-ups developing open-source foundation models, must also be covered by this flexibility so that they do not bear an excessive burden as soon as a model is released; the same applies to downstream users, such as SMEs, which cite fear of legal consequences as a barrier to adopting AI.

To achieve these complex but necessary goals, the proper functioning of the "AI Office" proposed by the European Parliament must be ensured. This body "will have the task of overseeing and updating the parameters in line with the development of technology, but above all of ensuring a coordinated implementation (supervision) of the AI law regulations at European level". Specifically, it could periodically adapt the AI Act, reduce coordination costs between specific sectors, provide detailed compliance guidelines and ensure alignment with other European regulations.

Ultimately, regulation must rhyme with innovation. Indeed, the law must be part of a coherent vision that allows Europe to establish itself as both a regulator and an innovator in artificial intelligence. After all, promoting our own technologies is the most effective way of asserting our own rules.

***

The AI revolution represents a unique opportunity that Europe cannot afford to miss. Over the last fifteen years, during which it has lost ground to the United States and, to a lesser extent, China, the Old Continent has too often appeared to be the "continent of the old". Not so much from a demographic point of view, but above all because of a widespread mistrust of innovation, a reluctance to take risks, and an alarmism that constantly emphasises the dangers of the unknown over the opportunities of progress. After all, innovation is measured in the marketplace, where the European Union plays, at most, a supporting role.

The European project, rediscovering the ambition that presided over its conception, must once again inspire its citizens to dream. AI is the ideal ally: it can be an engine for growth, a lever for catching up in terms of productivity and wage growth, and a fertile breeding ground where European creativity, genius and talent can germinate and flourish. The Renaissance, which began in Florence in the 14th and 15th centuries and helped to revitalise the whole of Europe artistically, economically and scientifically, rested on two pillars: a return to Antiquity and classical knowledge, and the realisation that the medieval era was over. In the same way, Europe should return to its founding principles, a single market based on de facto solidarity, competition for innovation and economic development for the benefit of people, and recognise that the post-Cold War period of globalisation is now over. To play a leading role in this new world, Europe must embrace innovation, Europe must embrace AI, and above all Europe must start dreaming again.

[1] Henry A. Kissinger, Eric Schmidt and Daniel Huttenlocher, The Age of AI: And Our Human Future, Hachette Book Group, 2021.

[2] All quotes come from interviews conducted by the authors for this study.

[3] A large language model (LLM) is a specialised type of artificial intelligence (AI) that has been trained on large amounts of text with the aim of understanding existing content and generating original content.

Publishing Director: Pascale Joannin
